To overcome the challenge of creating highly realistic scenarios, KPIT has built a process/framework that converts drive data into testable scenarios, enabling high-quality and accurate testing. Actual test drive data is analyzed, and events of interest for the automated driving functions are mined from it. These events are then processed and converted into synthetic scenarios. AI technologies support several of these steps: SLAM is used to identify the vehicle trajectory, and deep learning algorithms are used to identify relevant traffic participants, classify them and estimate their trajectories.

Let’s take a detailed look at the various processes and AI techniques used to extract and derive high-quality scenarios that assure V&V coverage for automated driving, along with exhaustive use-case testing.

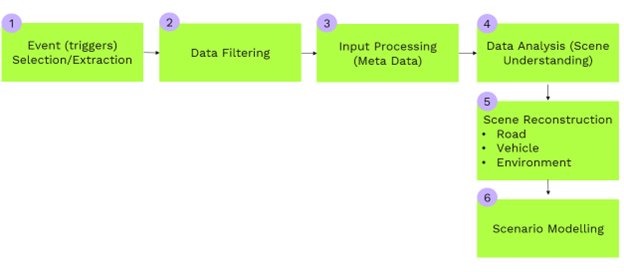

1. High-level workflow

Driving logs are analyzed to identify critical or interesting events that occurred during real road testing. The identified events are then processed to create or derive synthetic scenarios that can be used for validation through simulation (data-driven validation).
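As an illustration of event mining, the sketch below flags one event type, late braking. It is a minimal sketch under assumed inputs, not KPIT's algorithm: it assumes time-aligned signals for time, distance to the lead vehicle, closing speed and a brake-pedal flag, and treats a brake onset whose time-to-collision (TTC) is below a hypothetical 1.5 s threshold as "late". The names `Event` and `find_late_brakes` and the threshold value are illustrative.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Event:
    t: float    # timestamp of the brake onset (s)
    ttc: float  # time-to-collision at the onset (s)


def find_late_brakes(
    t: Sequence[float],
    dist: Sequence[float],           # distance to the lead vehicle (m)
    closing_speed: Sequence[float],  # ego speed minus lead speed (m/s), > 0 when approaching
    brake: Sequence[bool],           # brake pedal pressed?
    ttc_threshold: float = 1.5,      # hypothetical "late" threshold (s)
) -> List[Event]:
    """Return brake onsets whose time-to-collision is below ttc_threshold."""
    n = len(t)
    if not (len(dist) == len(closing_speed) == len(brake) == n):
        raise ValueError("all signals must have the same length")
    events: List[Event] = []
    for i in range(1, n):
        onset = brake[i] and not brake[i - 1]  # rising edge of the brake flag
        if onset and closing_speed[i] > 0:     # TTC is only defined when closing in
            ttc = dist[i] / closing_speed[i]
            if ttc < ttc_threshold:
                events.append(Event(t[i], ttc))
    return events
```

The returned event timestamps would then define the time windows that the later data-filtering step cuts from each input stream.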

2. Steps to create synthetic scenarios from real road driving data

- Event Selection/Extraction: The first step is to identify critical events. An event-mining algorithm is run over the drive data to find critical events that occurred during the drive, for example a feature that did not behave as expected, a feature that activated too early or too late, or a late braking event.

- Data Filtering: Once an event is identified, the next step is to filter the appropriate and relevant data required for processing. The required amount of data is extracted from every available input source (such as vehicle logs and sensor logs).

- Input Data Processing: The data is checked for correctness and for synchronization between the different input data streams. If the streams are not in sync, synchronization methods are applied. For example, if the driver switches on the wipers at the start of the drive, this is visible in the video. The time at which the wipers appear in the video is compared with the timestamp of the wiper status signal, and the streams are shifted onto a common timeline so that the data is synchronized.

- Data Analysis or Scene Understanding: This is the most critical step. Parameters of the subject vehicle (speed, position with respect to the road, yaw angle, etc.), of the traffic vehicles (speed, relative distance to the subject vehicle, position, orientation, etc.) and of the road (number of lanes, junctions, etc.) are derived for further processing. The method for deriving these parameters depends mainly on which input data is available, and the process becomes more challenging when the input data is very limited. In such cases, AI techniques are applied.

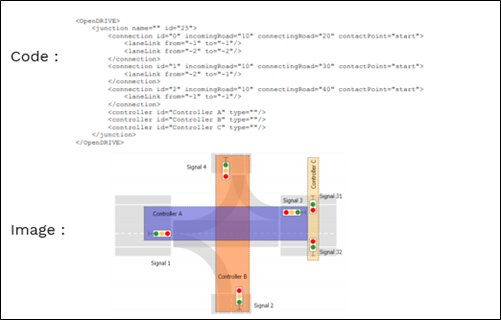

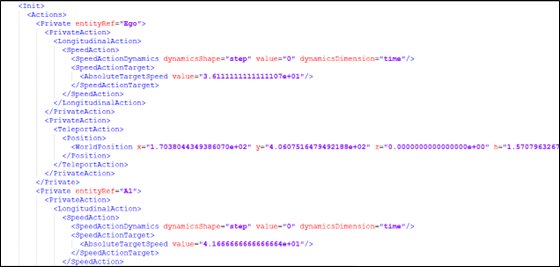

- Scene Reconstruction: The parameters derived in the Data Analysis step are categorized and segmented into the data streams required to construct the scene according to open standards (OpenDRIVE and OpenSCENARIO). More and more organizations are migrating to open standards because they allow a scenario model to be used in any compliant simulator with minimal modifications. OpenSCENARIO is a standard format for defining vehicle maneuvers, and OpenDRIVE is a standard format for defining the road infrastructure.

- Scenario Modelling: In this step, open-standard data is generated from the outputs of the previous step and made compatible with the required simulator. Because the data follows open standards, the scenario can be used and visualized in a wide range of commercial and open-source simulators.
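The wiper-based synchronization from the Input Data Processing step can be sketched as follows. This is a minimal sketch, assuming the wiper switch-on time in the video has already been found (for example by a vision model or manual annotation) and the wiper status signal is logged as timestamped 0/1 samples. It estimates a single constant offset between the two clocks and shifts the video timestamps; it does not correct clock drift. All function names are hypothetical.

```python
from bisect import bisect_right
from typing import List, Sequence


def first_rising_edge(times: Sequence[float], values: Sequence[int]) -> float:
    """Time of the first off -> on transition in a sampled status signal."""
    for i in range(1, len(values)):
        if values[i] and not values[i - 1]:
            return times[i]
    raise ValueError("no rising edge found")


def estimate_offset(video_event_t: float, signal_t: Sequence[float], signal_v: Sequence[int]) -> float:
    """Offset (s) to add to video timestamps so they line up with the signal log."""
    return first_rising_edge(signal_t, signal_v) - video_event_t


def align(video_t: Sequence[float], offset: float) -> List[float]:
    """Shift video frame timestamps onto the signal log's timeline."""
    return [t + offset for t in video_t]


def sample_at(signal_t: Sequence[float], signal_v: Sequence[int], t: float) -> int:
    """Zero-order hold: value of the most recent signal sample at or before t."""
    i = bisect_right(signal_t, t) - 1
    return signal_v[max(i, 0)]  # clamp to the first sample for t before the log starts
```

For a 30 fps video in which the wipers first move at frame 15 (0.5 s), and a wiper signal that switches on at 1.0 s, `estimate_offset(15 / 30, ...)` gives 0.5 s, and `sample_at` can then look up the signal value for any aligned frame.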

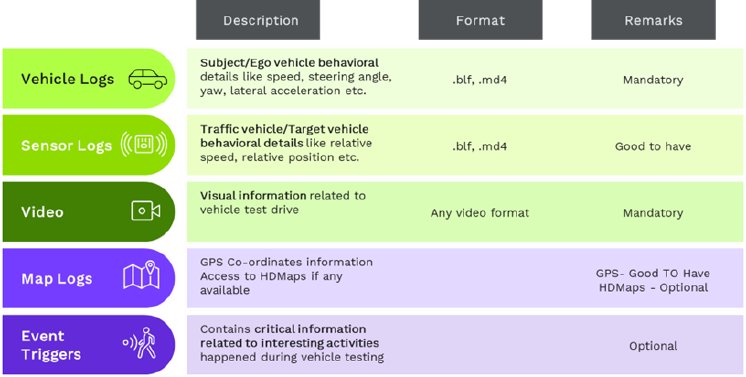

Data from various sources is required to derive scenarios from real-world data.

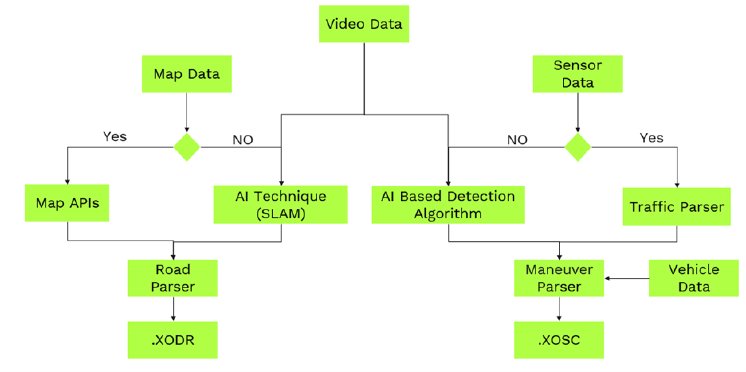

4. Implementing AI techniques

The methodology for scene derivation depends on the input data availability.

If most of the inputs listed in Table 1 are available, the process is simple and highly automated: with the help of parsers and automation scripts, the real-world drive data can be converted into synthetic scenarios.

Typically, however, this is not the case: the available inputs are limited, which makes the process more complex. This is where AI helps streamline the process.

Consider an example where only video data is available as input, without other data such as sensor or vehicle logs. In this case, an AI technique such as Simultaneous Localization and Mapping (SLAM) can be used to derive the subject vehicle parameters. SLAM constructs or updates a map of an unknown environment from reference points observed in the video frames, while simultaneously keeping track of an agent's (for example, the subject vehicle's) location within it. With SLAM, the subject vehicle can be tracked and its location and position derived. SLAM also has limitations: its estimation error accumulates over time, so it cannot be applied reliably to long data sequences. To avoid this, the data set is segmented, SLAM is run on each shorter segment, and the segment results are stitched back together with minimal error.
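The segment-and-stitch idea can be sketched as below. The source does not say how segments are stitched, so this is a minimal sketch under stated assumptions: SLAM (not shown) returns each segment's trajectory as 2D poses (x, y, yaw) in its own local frame, consecutive segments share exactly one frame, and stitching is plain rigid SE(2) chaining on that shared frame. It does not handle scale drift, which monocular SLAM would also need to correct, or global optimization such as loop closure. All names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Pose = Tuple[float, float, float]  # (x, y, yaw): metres, metres, radians


def segment_indices(n_frames: int, seg_len: int) -> List[range]:
    """Split frames 0..n_frames-1 into segments of at most seg_len frames;
    consecutive segments share one frame, which serves as the stitch point."""
    if seg_len < 2:
        raise ValueError("seg_len must be at least 2")
    if n_frames <= seg_len:
        return [range(n_frames)]
    segments, start = [], 0
    while start < n_frames - 1:
        end = min(start + seg_len, n_frames)
        segments.append(range(start, end))
        start = end - 1  # next segment starts on this segment's last frame
    return segments


def wrap(angle: float) -> float:
    """Normalize an angle to [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def compose(a: Pose, b: Pose) -> Pose:
    """SE(2) composition a * b: pose b, expressed in frame a, mapped into a's parent frame."""
    ax, ay, ath = a
    bx, by, bth = b
    c, s = math.cos(ath), math.sin(ath)
    return (ax + c * bx - s * by, ay + s * bx + c * by, wrap(ath + bth))


def inverse(a: Pose) -> Pose:
    """Inverse SE(2) transform, so that compose(a, inverse(a)) is the identity."""
    x, y, th = a
    c, s = math.cos(th), math.sin(th)
    return (-c * x - s * y, s * x - c * y, wrap(-th))


def stitch(segment_trajs: Sequence[Sequence[Pose]]) -> List[Pose]:
    """Chain per-segment trajectories (each in its own local frame) into one
    global trajectory, anchoring each segment on the frame it shares with the previous one."""
    if not segment_trajs:
        return []
    out = list(segment_trajs[0])  # the first segment's frame is taken as the global frame
    for seg in segment_trajs[1:]:
        # T maps this segment's local frame into the global frame such that
        # its first pose (the shared frame) lands on the last global pose.
        T = compose(out[-1], inverse(seg[0]))
        out.extend(compose(T, p) for p in seg[1:])  # skip the duplicated shared frame
    return out
```

Keeping one shared frame per boundary is the simplest way to anchor segments; a longer overlap would allow a least-squares alignment that is less sensitive to noise in a single pose.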

Having solved subject vehicle estimation, the next challenge is deriving the traffic parameters and road geometry. This can be addressed with deep learning (DL) based algorithms that derive traffic parameters such as each participant's position, classification and speed, and road parameters such as lane information. A well-trained DL algorithm is run on the video to identify the required traffic participants and road information.
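The sketch below shows one simple way detections could be turned into traffic parameters. It assumes a DL detector and tracker (not shown) already provide, per frame, the bottom-centre pixel of a participant's bounding box, and that ego poses come from the SLAM step. It swaps in a flat-ground pinhole camera model with assumed intrinsics (`fx`, `fy`, `cx`, `cy`) and an assumed mounting height, with the camera level and located at the ego origin; this is an illustration, not the method used in the source. A real pipeline would also smooth the noisy estimates, for example with a Kalman filter. All function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Pose = Tuple[float, float, float]  # ego (x, y, yaw) in the world frame
Point = Tuple[float, float]


def ground_point_from_pixel(u: float, v: float, fx: float, fy: float,
                            cx: float, cy: float, cam_height: float) -> Point:
    """Flat-ground pinhole model: map the bottom-centre pixel (u, v) of a detection
    to (forward, left) metres in the ego frame. Assumes a level camera (no pitch
    or roll) mounted cam_height metres above the road at the ego origin."""
    if v <= cy:
        raise ValueError("pixel is at or above the horizon; no ground intersection")
    forward = fy * cam_height / (v - cy)
    right = (u - cx) * forward / fx
    return (forward, -right)  # ego frame uses y to the left


def to_world(ego: Pose, rel: Point) -> Point:
    """Rotate and translate an ego-frame point into the world frame."""
    x, y, yaw = ego
    c, s = math.cos(yaw), math.sin(yaw)
    return (x + c * rel[0] - s * rel[1], y + s * rel[0] + c * rel[1])


def track_speeds(ego_poses: Sequence[Pose], rel_positions: Sequence[Point],
                 dt: float) -> List[float]:
    """Finite-difference speed (m/s) of one tracked participant between frames."""
    if len(ego_poses) != len(rel_positions):
        raise ValueError("need one ego pose per detection")
    world = [to_world(e, r) for e, r in zip(ego_poses, rel_positions)]
    return [math.hypot(b[0] - a[0], b[1] - a[1]) / dt for a, b in zip(world, world[1:])]
```

For example, with `fx = fy = 1000`, principal point (640, 360) and a 1.5 m camera height, a box bottom at pixel (640, 460) maps to a point 15 m straight ahead.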

All these parameters are then mapped to the respective tags in OpenDRIVE and OpenSCENARIO, with the appropriate coordinate transformations, to generate a scenario file. This scenario file is then used in a simulator to visualize the scenario. When the camera configuration parameters (intrinsic and extrinsic) used for data collection are available, more accurate scenario models can be generated.
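As a minimal sketch of the tag-mapping step for the subject vehicle's trajectory, the code below uses Python's standard `xml.etree.ElementTree` to emit a `Trajectory` fragment in the polyline form of OpenSCENARIO 1.x (`Shape` / `Polyline` / `Vertex` / `Position` / `WorldPosition`). It does not generate the surrounding storyboard, entities or the OpenDRIVE road file, and element and attribute names should be checked against the schema version the target simulator supports; in a complete scenario such a trajectory would be used by a `FollowTrajectoryAction`, with the exact wrapping depending on the schema version. The function name `trajectory_element` is hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import Iterable, Tuple

Sample = Tuple[float, float, float, float]  # (time s, x m, y m, heading rad)


def trajectory_element(name: str, samples: Iterable[Sample]) -> ET.Element:
    """Build an OpenSCENARIO-style <Trajectory> with a polyline of timed world positions."""
    traj = ET.Element("Trajectory", name=name, closed="false")
    polyline = ET.SubElement(ET.SubElement(traj, "Shape"), "Polyline")
    for t, x, y, h in samples:
        vertex = ET.SubElement(polyline, "Vertex", time=f"{t:.3f}")
        position = ET.SubElement(vertex, "Position")
        # WorldPosition: x/y in metres, h (heading) in radians
        ET.SubElement(position, "WorldPosition", x=f"{x:.3f}", y=f"{y:.3f}", h=f"{h:.4f}")
    return traj


if __name__ == "__main__":
    ego = [(0.0, 0.0, 0.0, 0.0), (0.1, 1.0, 0.0, 0.0), (0.2, 2.0, 0.1, 0.05)]
    print(ET.tostring(trajectory_element("ego_trajectory", ego), encoding="unicode"))
```

The samples could come directly from the stitched SLAM trajectory, with each pose's timestamp, position and yaw mapped to `time`, `x`/`y` and `h`.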

The above techniques help generate high-quality, realistic scenario models from real road data, which can be used for validation, for example closed-loop testing of an AD stack or of an individual AD function.

In summary, applying these AI techniques to create scenarios from real-world data produces high-quality scenarios and brings several additional benefits:

- Time savings of up to 50% through automation.

- Creation of realistic scenarios from limited data sets (e.g., camera data only).

- Parametrization of these realistic scenarios, which allows millions of scenario variants to be tested accurately, ensuring the required coverage.